Abstract

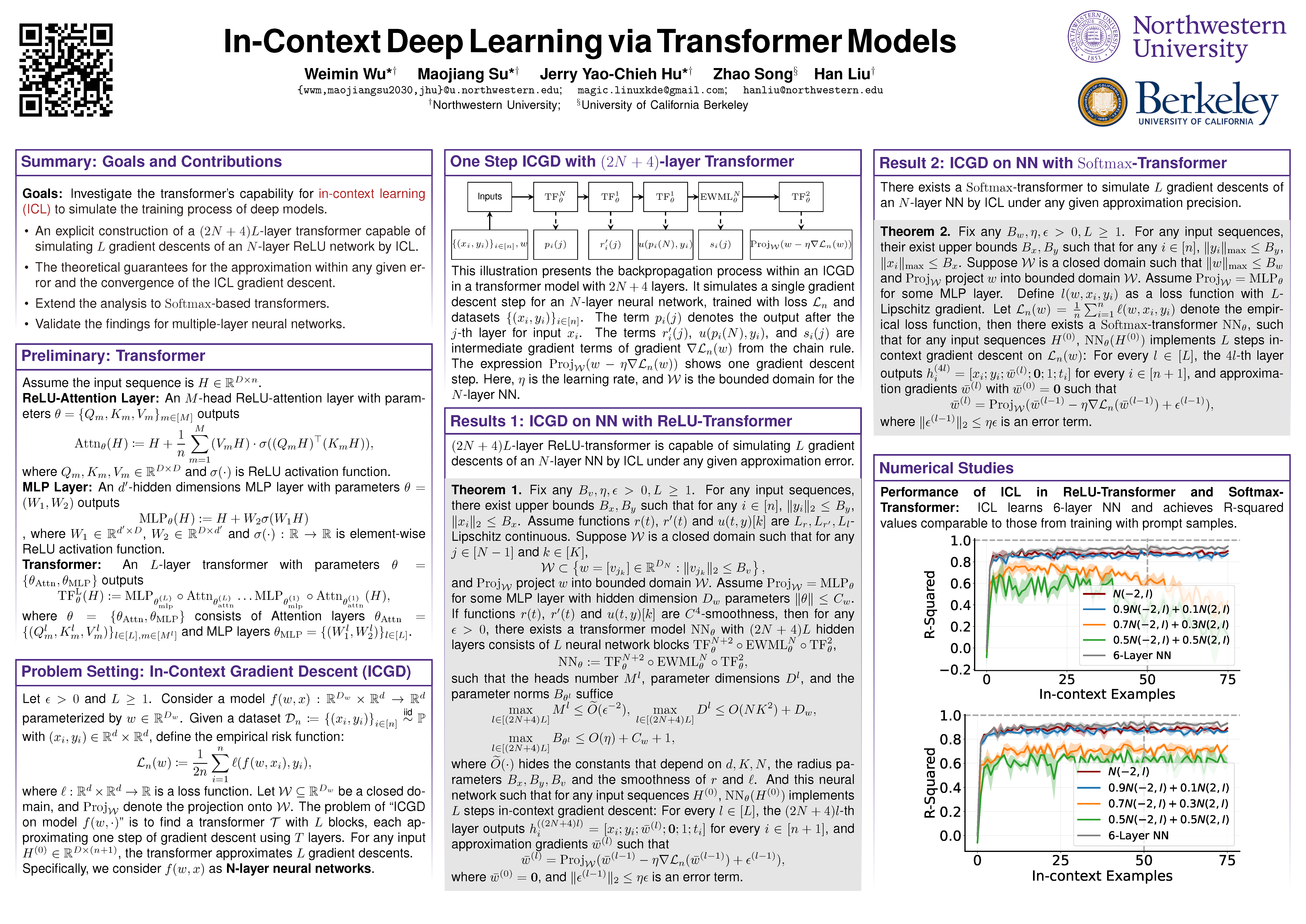

We investigate the transformer’s capability for in-context learning (ICL) to simulate the training process of deep models. Our key contribution is a positive example of a transformer implicitly training a deep neural network by gradient descent via ICL.

Figure 1: Transformers are Deep Optimizers: Provable In-Context Learning for Deep Model Training