
Abstract

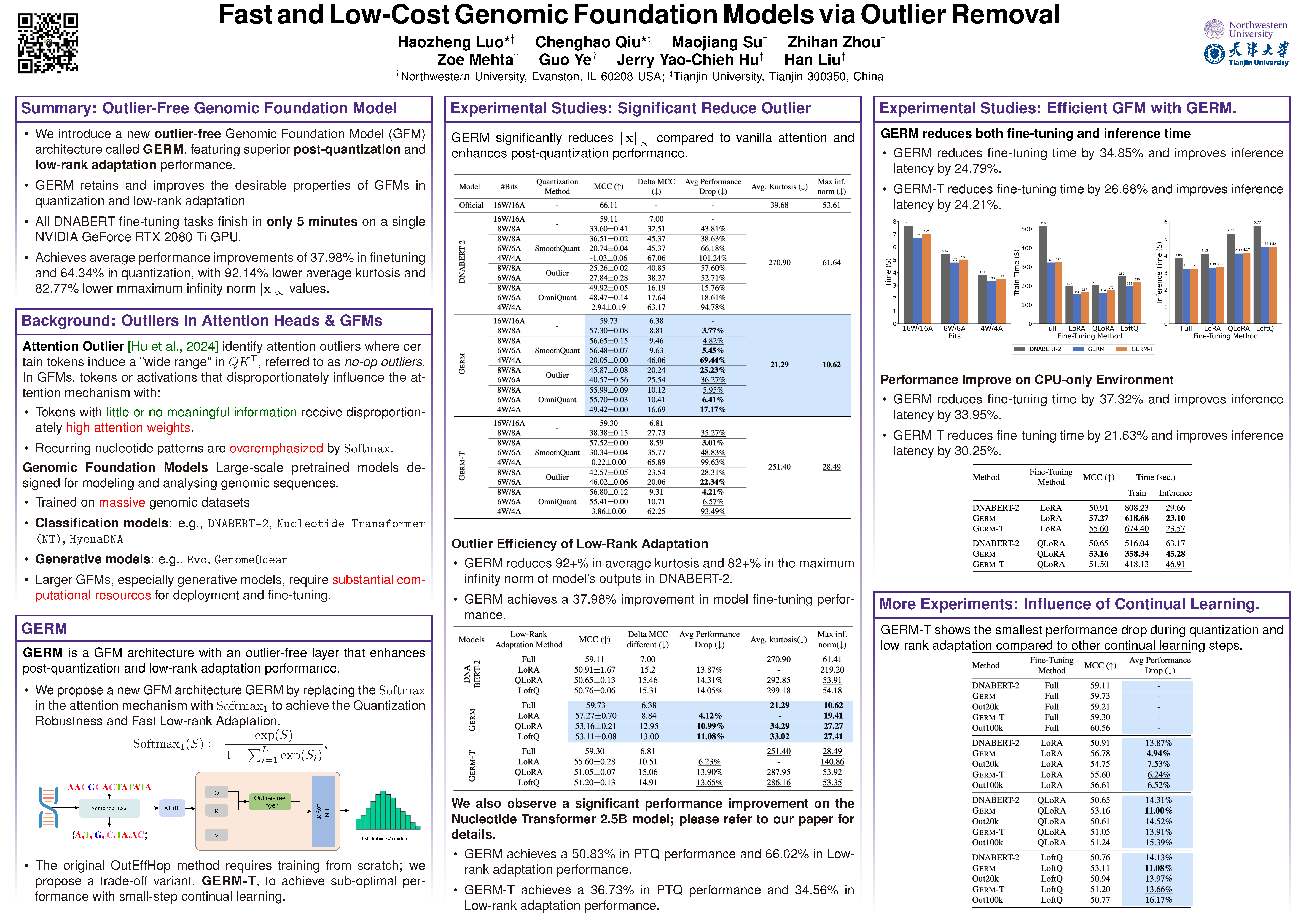

To address the scarcity of computational resources in genomic modeling, this paper introduces GERM, a genomic foundation model with strong compression performance and fast adaptability. GERM eliminates outliers during both pretraining and fine-tuning, which improves both low-rank adaptation (LoRA) and robustness to post-training quantization. A continual-learning variant, GERM-T, further reduces retraining costs by applying outlier removal in small, incremental steps. Compared to DNABERT-2, GERM improves fine-tuning performance by 37.98% and quantization robustness by 64.34%, and reduces average kurtosis by 92.14%. These results demonstrate GERM’s practical effectiveness for genomic tasks in resource-constrained environments.
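The abstract uses kurtosis as a measure of outliers and links outlier removal to quantization robustness. The short Python sketch below illustrates that link on synthetic data only. It assumes Pearson kurtosis computed over a flat list of values and symmetric per-tensor absmax int8 quantization; neither choice is taken from GERM, and the helper names `kurtosis` and `absmax_quantize_error` are hypothetical.

```python
import random

def kurtosis(xs):
    """Pearson kurtosis E[(x - mu)^4] / (E[(x - mu)^2])^2; Gaussian data gives about 3.

    Assumption: kurtosis over a flat list of values, not necessarily GERM's definition.
    """
    n = len(xs)
    mu = sum(xs) / n
    m2 = sum((x - mu) ** 2 for x in xs) / n
    m4 = sum((x - mu) ** 4 for x in xs) / n
    return m4 / (m2 ** 2)

def absmax_quantize_error(xs, bits=8):
    """Mean squared error of symmetric per-tensor absmax quantization (hypothetical helper)."""
    qmax = 2 ** (bits - 1) - 1            # 127 for int8
    scale = max(abs(x) for x in xs) / qmax  # a single large outlier inflates the step size
    dequantized = [round(x / scale) * scale for x in xs]
    return sum((x - d) ** 2 for x, d in zip(xs, dequantized)) / len(xs)

random.seed(0)
clean = [random.gauss(0.0, 1.0) for _ in range(10_000)]
# Replace four values with large outliers to mimic an outlier-heavy tensor.
with_outliers = clean[:-4] + [60.0, -60.0, 80.0, -80.0]

print("kurtosis (clean):        ", round(kurtosis(clean), 2))
print("kurtosis (outliers):     ", round(kurtosis(with_outliers), 2))
print("int8 MSE (clean):        ", absmax_quantize_error(clean))
print("int8 MSE (outliers):     ", absmax_quantize_error(with_outliers))
```

A handful of extreme values raises kurtosis by orders of magnitude and, because absmax scaling stretches the quantization grid to cover them, also raises the quantization error for every other value. This is one plausible reading of why lower kurtosis and better quantization robustness are reported together.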
